About the Ratings

CTR's Generic Rubric: An Attempt to Quantify "Quality"

We believe that a review is the start of a conversation about the quality of any material used with children, and it is important to acknowledge up front that any two people are going to have different definitions of quality.

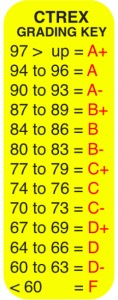

Children’s Technology Review is an ongoing survey of children’s interactive media. The survey is stored in a large database which you can search online. To get a program’s letter grade, percentage, or 1 to 5 star rating, you need to do some simple math. The process is automated for reviewers who use the CTR internal slider instrument, but it is defined in detail below for replication purposes.

Add up the points in each category (always = 1 point, some extent = .5 points, never = 0 points, and N.A. = Not Applicable) and then divide by the number of items in the category. This number can then be converted to your scale of choice — either a percent or 0 to 5 point scale.
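For readers who want to replicate the math by hand, here is a minimal Python sketch of the category calculation described above. It is an illustration only, not CTR's internal slider instrument. The function name `category_score` and the letter codes (`A` for always, `SE` for some extent, `N` for never, `NA` for not applicable) are hypothetical shorthand, and the sketch assumes that N.A. items are left out of both the point total and the item count.

```python
from typing import Optional, Sequence

# Point values for each response. None marks "N.A." items, which this
# sketch ASSUMES are skipped entirely (not counted as zero).
POINTS = {"A": 1.0, "SE": 0.5, "N": 0.0, "NA": None}


def category_score(responses: Sequence[str]) -> Optional[float]:
    """Return a category score between 0.0 and 1.0.

    Returns None when every item in the category was marked N.A.,
    because there is nothing to divide by.
    """
    counted = [POINTS[r] for r in responses if POINTS[r] is not None]
    if not counted:
        return None
    return sum(counted) / len(counted)


# Example: two "always", one "some extent", one "never", one N.A.
score = category_score(["A", "A", "SE", "N", "NA"])
percent = score * 100      # scale of choice: percent
five_point = score * 5     # scale of choice: 0 to 5 points
```

In this example the category earns 2.5 of a possible 4 points, because the N.A. item is dropped before dividing.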

When reviewing any interactive product, it is important to match the instrument with the type of software. In other words, you can’t rate a program low in “Educational Value” if it is intended to be a fictional video game. Even the word “Educational” must be defined. Whose definition of education is being used as a standard? What is the educational philosophy that is most valued? Is the product intended for classroom or home use? That’s where the “N.A.” (Not Applicable) option comes in, and reviewers must be trained in the art of using it, or not using it.

It is important to consider when the review was written. A highly rated program in 2003 might be equal to a poorly rated program in the context of current multi-touch screen technology.

CTR’s instrument was constructed to be used as a general framework. The final grade or number rating is only meaningful if it is taken in the context of the current market at the time the product is being sold.

HISTORY

Children’s Technology Review began in 1985 as part of an attempt to survey all children’s interactive media products (See Buckleitner, 1986, A Survey of Early Childhood Software, High/Scope Press).

Originally a Master’s Thesis, the work became part of a doctoral dissertation, and provides core reviews for CTR’s weekly and monthly publications, and other media outlets.

The reviews, grades, and ratings are housed in an internal database with 17,000+ commercial products (as of 2021) for approximately 20 platforms (e.g., Mac OS X, Windows, iOS, Android, Nintendo, PlayStation, and Xbox).

Paid subscribers have full online access to this database. As a result, this work is self-funded.

NOTES ON ASSIGNING GRADES OR RATINGS TO COMPLEX INTERACTIVE PRODUCTS

We urge our readers to consider that the process of assigning quantitative ratings to an interactive product is imprecise, and that we view every review as “the start of a conversation.” This is especially true because the digital code that makes up an interactive product continually evolves (which is why we date our reviews and award seals). For those interested in this, see the article “What (Exactly) is an App?”. In our attempt to be “the least worst review system,” the following measures are taken:

- Whenever possible (but not in all cases), child testing is conducted.

- We use a series of rubrics, and every item contains an “NA” option, so the reviewer has the option to not consider a particular item.

- CTR’s theoretical bias and underlying justification for definitions of “quality” are clearly articulated and supported by a research base.

- Reviews are dated and updated.

- Editorial guidelines are followed for handling press junkets, gifts and editorial copies. As longtime contributors to the New York Times, we have adopted the same editorial guidelines.

- Errors and/or corrections to reviews are posted here.

- The author of the review is provided.

- Disclosures are provided, so that any potential conflict of interest is transparent to the reader.

- Contacting the managing editor is a straightforward process.

- CTR does not sell award seals.

- CTR does not make money from iTunes or Google Play partnership links.

- CTR editors make every effort to remain blind to pre-existing reviews (e.g., in iTunes, Amazon.com, or other review sites). Our reviews reflect the instrument.

- External references are cited.

Articles on the Rating Process

What (Exactly) is an App?

February 25, 2015

Examines interactive media from a social-cultural point of view, considering the “culture” and expectations of the people behind the app.

Are These Ratings Real?

July 13, 2013

We’re not going to accuse somebody of posting fake ratings. But when a poorly designed app gets 23 five-star ratings, things start to look fishy. If you’re the publisher and you’d like to explain what’s going on…

10,500 Objective Reviews, At Your Fingertips

July 25, 2012

CTR reviews are easier to find, and they look better, too. The improved format includes a cleaner, easier-to-browse layout and one-click search scripts, making it possible to zoom in on the reviews you want. The database is available only to CTR subscribers.

CTR, May 2012: Low Ratings and Sad Faces

May 18, 2012

We’re always sorry to give any product a less-than-glowing review, but like a doctor who tells you that you need to lose a few pounds, our job can’t involve hurt feelings. Will our rating of a product change? Not unless the product does, and that leads our readers to a question we think about a lot. “How can a product earn five stars?”

How Does a Product Get a Five-Star Rating?

October 3, 2011

I spoke on a panel on the evaluation of interactive media at the Fred Rogers Center, and I referred to this page, from the September 2011 issue of Children’s Technology Review. I issued a strong disclaimer that every theory can find a champion in technology — in other words, one person’s view of quality can (and should) differ from another person’s view. As Jesse Schell (of CMU) reminded the group, measuring quality in an interactive product is like trying to assess beauty….

Many older products that we’ve reviewed are stored at the Strong Museum of Play, where they can be used for research.

The six categories in the instrument can help you better understand factors that may be related to “quality” in a children’s interactive media product. In brief, the instrument favors software that is easy to use, is child controlled, has solid educational content, is engaging and fun, is designed with features that you’d expect to see, and is worth the money given the current state of children’s interactive media publishing.

CTR editors typically recommend programs that receive a 4.2 star rating or better; a product must get at least a 4.4 rating to get an Editor’s Choice seal. You can easily find these titles on our online database.
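The two cutoffs above can be expressed as a simple lookup. This is a hedged sketch: the function name `rating_tier` and the tier labels it returns are hypothetical, and because editors "typically" recommend at 4.2 stars, the real decision also involves editorial judgment that code does not capture.

```python
def rating_tier(stars: float) -> str:
    """Map a star rating to the tier suggested by the published cutoffs.

    The label strings are illustrative; only the 4.2 and 4.4 thresholds
    come from the text.
    """
    if stars >= 4.4:
        return "Editor's Choice"
    if stars >= 4.2:
        return "Recommended"
    return "Below recommendation threshold"


for stars in (4.5, 4.3, 3.9):
    print(stars, rating_tier(stars))
```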

CHILDREN’S INTERACTIVE MEDIA RATING INSTRUMENT

Key: N = Never, or 0 points

SE = Some Extent, or .5 points

A = Always, or 1 point

NA = Not counted in the calculation

I. Ease of Use (Can a child use it with minimal help?)

Note that this factor is combined with “Childproof” on the short form of this instrument.

N SE A NA

__ __ __ __ Skills needed to operate the program are in range of the child

__ __ __ __ Children can use the program independently after the first use

__ __ __ __ Accessing key menus is straightforward

__ __ __ __ Reading ability is not prerequisite to using the program

__ __ __ __ Graphics make sense to the intended user

__ __ __ __ Printing routines are simple

__ __ __ __ It is easy to get into or out of any activity at any point

__ __ __ __ Getting to the first menu is quick and easy

__ __ __ __ Controls are responsive to the touch

__ __ __ __ Written materials are helpful

__ __ __ __ Instructions can be reviewed on the screen, if necessary

__ __ __ __ Children know if they make a mistake

__ __ __ __ Icons are large and easy to select with a moving cursor

__ __ __ __ Installation procedure is straightforward and easy to do

II. Childproof (Is it designed with “child-reality” in mind?)

Note that this factor is combined with I. in the short form of this instrument.

N SE A NA

__ __ __ __ Survives the “pound on the keyboard” test; more recently, the digital playdoh test

__ __ __ __ Offers quick, clear, obvious response to a child's action

__ __ __ __ The child has control over the rate of display

__ __ __ __ The child has control over exiting at any time

__ __ __ __ The child has control over the order of the display

__ __ __ __ Title screen sequence is brief or can be bypassed

__ __ __ __ When a child holds a key down, only one input is sent to the computer

__ __ __ __ Files not intended for children are safe

__ __ __ __ Children know when they’ve made a mistake

__ __ __ __ This program would operate smoothly in a home or classroom setting

III. Educational

What can a child learn from this program? What do they walk away from the experience with, that they didn’t have when they first came to the experience?

N SE A NA

__ __ __ __ Offers a good presentation of one or more content areas

__ __ __ __ Graphics do not detract from the program’s educational intentions

__ __ __ __ Feedback employs meaningful graphic and sound capabilities

__ __ __ __ Speech is used

__ __ __ __ The presentation is novel with each use

__ __ __ __ Good challenge range (this program will grow with the child)

__ __ __ __ Feedback reinforces content (embedded reinforcements are used)

__ __ __ __ Program elements match direct experiences

__ __ __ __ Content is free from gender bias

__ __ __ __ Content is free from ethnic bias

__ __ __ __ A child’s ideas can be incorporated into the program

__ __ __ __ The program comes with strategies to extend the learning

__ __ __ __ There is a sufficient amount of content

IV. Entertaining

Is this program fun to use?

N SE A NA

__ __ __ __ The program is enjoyable to use

__ __ __ __ Graphics are meaningful and enjoyed by children

__ __ __ __ This program is appealing to a wide audience

__ __ __ __ Children return to this program time after time

__ __ __ __ Random generation techniques are employed in the design

__ __ __ __ Speech and sounds are meaningful to children

__ __ __ __ Challenge is fluid, or a child can select their own level

__ __ __ __ The program is responsive to a child’s actions

__ __ __ __ The theme of the program is meaningful to children

V. Design Features

How “smart” is this program?

N SE A NA

__ __ __ __ The program has speech capacity

__ __ __ __ The program has printing capacity

__ __ __ __ Keeps records of child’s work

__ __ __ __ “Branches” automatically: challenge level is fluid

__ __ __ __ A child’s ideas can be incorporated into the program

__ __ __ __ Sound can be toggled or adjusted

__ __ __ __ Feedback is customized in some way to the individual child

__ __ __ __ Program keeps a history of the child’s use over a period of time

__ __ __ __ Teacher/parent options are easy to find and use

VI. Value (How much does it cost vs. what it does? Is it worth it?)

Considering the factors rated above, and the average retail price of software, rate this program’s relative value considering the current software market.

PROCEDURE FOR GENERATING A STAR RATING:

We use an automated set of calculated fields to generate the ratings. To do it by hand, first count the number of items in the category, then add up the total points. Divide the points by the number of items, and multiply by 100 to get the percent. Divide the overall percent by 20 (or multiply it by .05) to convert it to the 5 star scale, so that 88 percent becomes 4.4 stars. Consider also any extra hardware attachments required to get the full potential of the program, e.g., a sound card or CD-ROM.
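As a rough sketch of the final conversion step, the Python below turns category percentages into an overall percent and a star rating. Two things here are assumptions rather than CTR's documented method: the overall percent is taken as the unweighted mean of the category percents (the procedure does not say how categories are combined), and the star rating assumes that 100 percent corresponds to 5 stars, so the percent is divided by 20. The function names and sample numbers are hypothetical.

```python
from statistics import mean
from typing import Sequence


def overall_percent(category_percents: Sequence[float]) -> float:
    """Combine category percents into one overall percent.

    ASSUMPTION: every category carries equal weight (unweighted mean).
    """
    return mean(category_percents)


def percent_to_stars(percent: float) -> float:
    """Convert a 0 to 100 percent score to a 5 star scale, to one decimal.

    ASSUMPTION: 100 percent equals 5 stars, so each star is worth 20 points.
    """
    return round(percent / 20, 1)


# Made-up category percents for six categories, for illustration only.
sample = [90.0, 80.0, 100.0, 85.0, 75.0, 98.0]
overall = overall_percent(sample)   # 88.0
stars = percent_to_stars(overall)   # 4.4
```

If categories should carry different weights, `overall_percent` is the only function that would need to change.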

Copyright 1985, by Warren Buckleitner

Don’t forget that this form is generic! To use it properly, you have to look at a lot of similarly designed products, and remember that the “NA” field can be a particularly powerful tool to influence the overall score. And there is no substitute for child testing.

CTR User Ratings:

This is a fast, gut-level rating of this product on a scale of 0 to 100, bad to good, or “dust or magic.” In making this assessment, consider:

- How does it compare with competitive products?

- Ease of use

- Innovation and unique features

- If you spent your own money on this product, would you be happy with it?